VR is not new, but its recent resurgence has drawn renewed attention to the technology. To stay at the forefront of technology, KLM started a department called "KLM XR Center of Excellence," or XRCOE for short. XRCOE makes VR training for the KLM crew and tries out new technologies to see if they could fit the company's needs. Research is also being done to see if AR could be implemented in some operations within KLM. As of September 1st, 2021, I am researching AR within XRCOE.

The rest of this report will be about the different projects I've been a part of and my role. In the end, I will give an overview of my obstacles, accomplishments, and my current occupation at KLM.

KLM has already taught me a lot about how to navigate within a big company. It's a different way of getting things done and getting new stakeholders on board while coordinating with the other departments involved. It also allowed me to test my interaction design skills in a new medium. VR interaction is very different from flat-screen interaction. Users expect things to behave as they do in real life, or at least to follow some fundamentals of physics. Feedback is, therefore, a vital tool that we have to implement correctly while developing VR training.

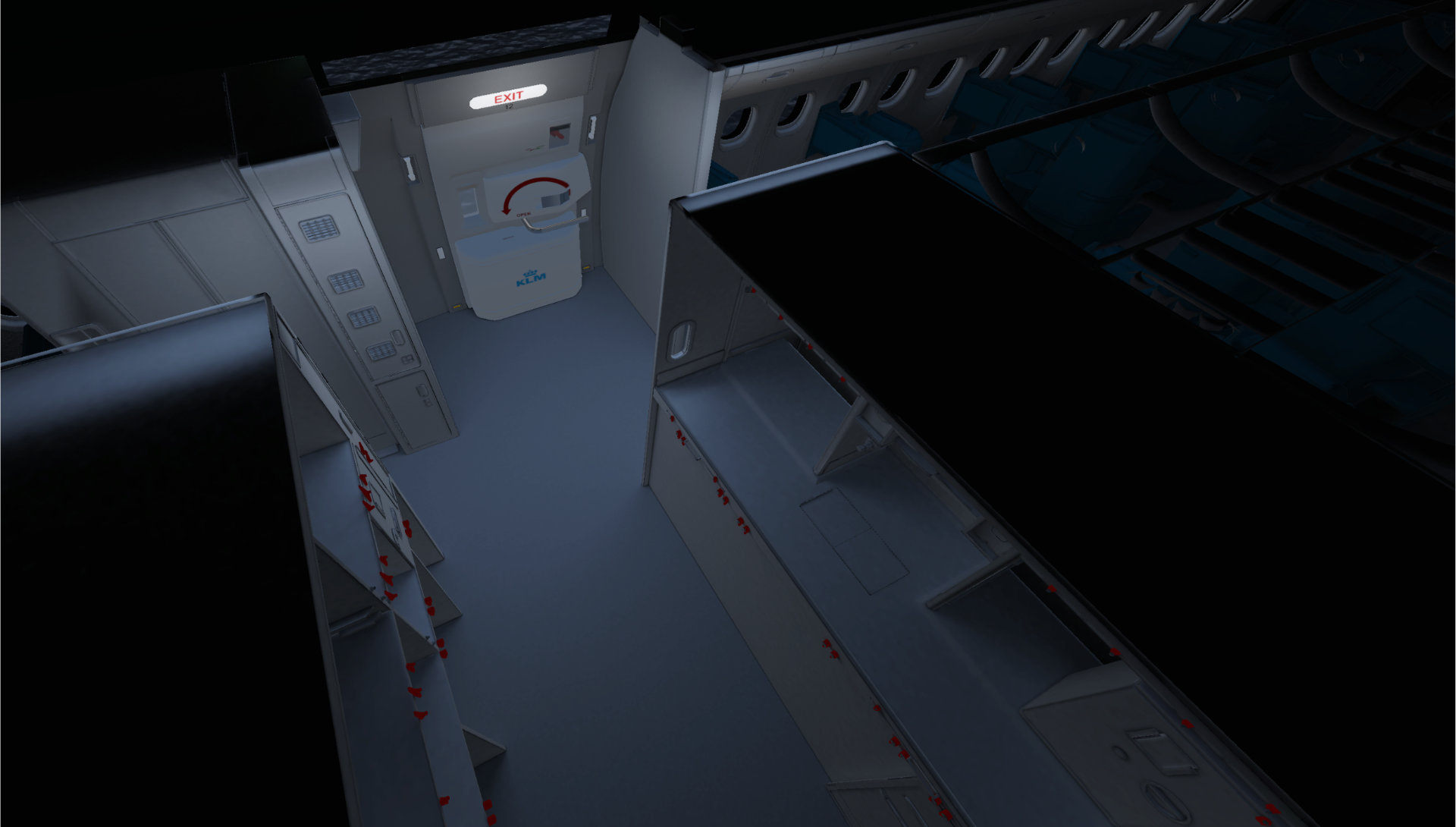

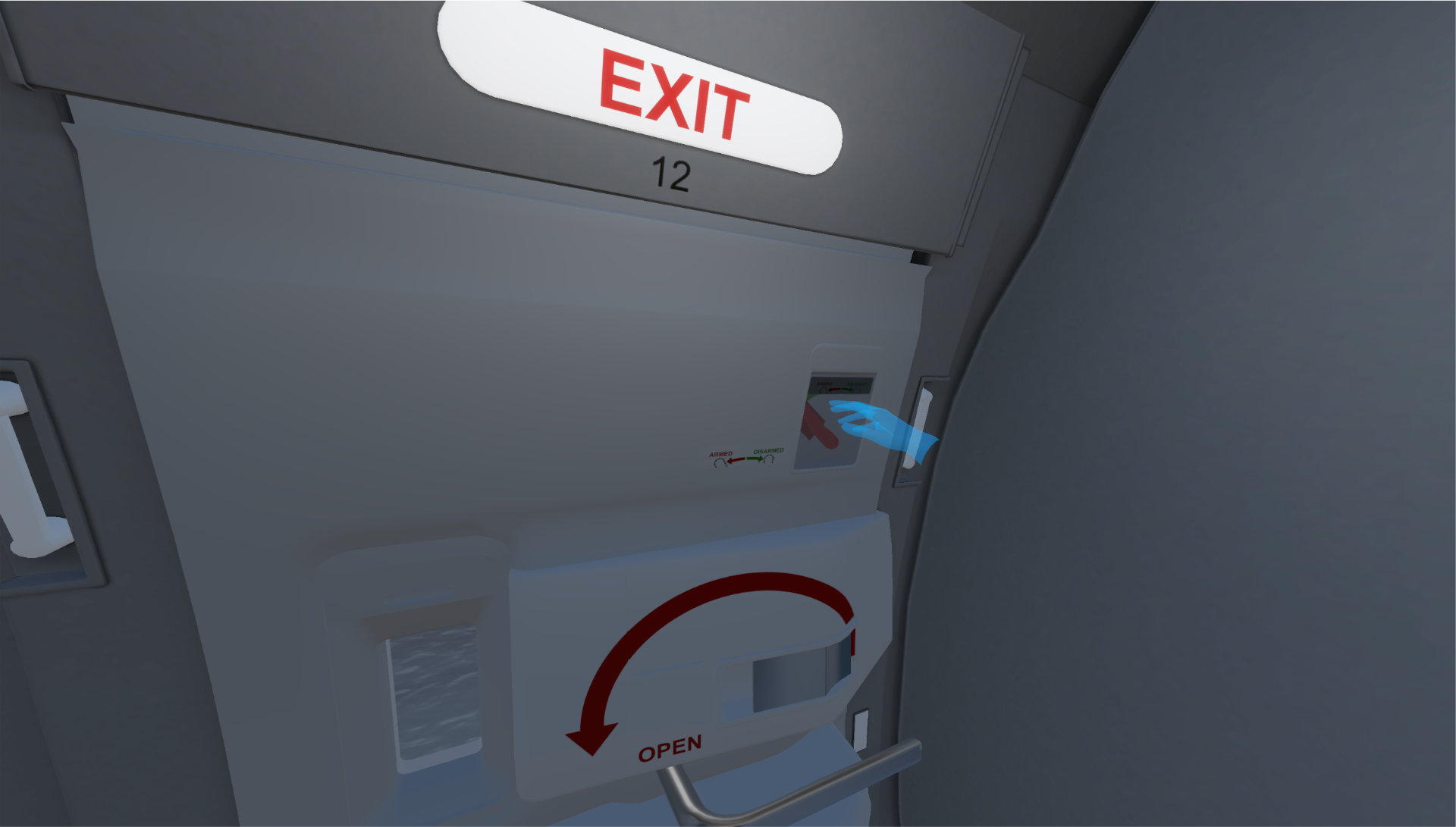

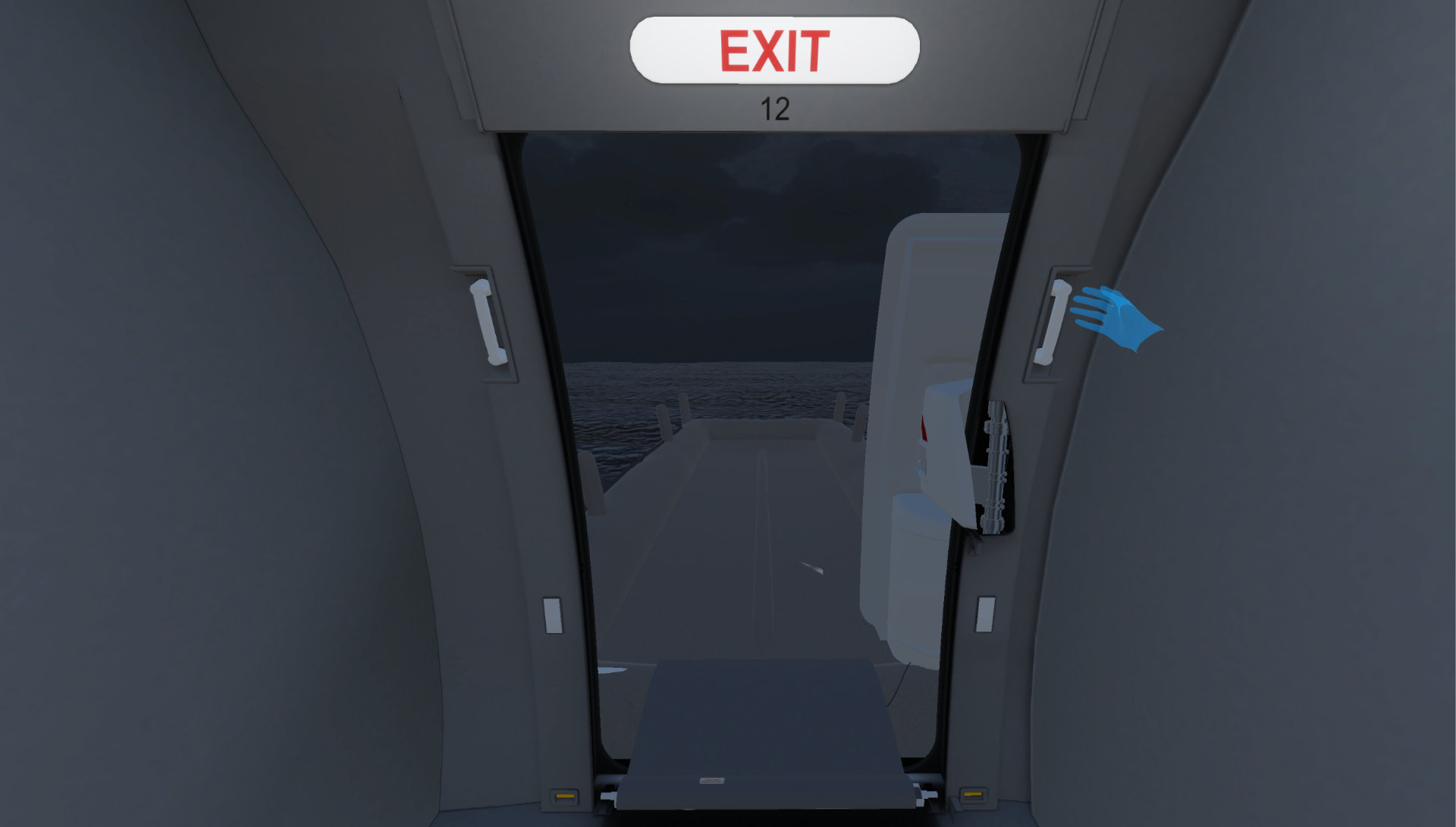

The slide raft training trains the cabin crew on what to do after an emergency landing on water. The cabin crew has to perform a couple of checks before opening the door and deploying the raft. Our team developed the training for the Oculus Quest 2 and iPad. We also visited one of the hangars at KLM to experience opening an actual Boeing 737 door and gather reference material through pictures and lidar scans.

I had to convert CAD files to a usable FBX format for Unity. In short, a high-resolution 3D model had to be converted into a lower-resolution model while still keeping enough detail for user immersion. The Quest 2 is equipped with a mobile processor, so it cannot handle that many polygons (roughly the 3D equivalent of pixels). For that reason, models far away have a lower 3D resolution than models near the user.
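The idea of giving distant models less detail can be sketched in a few lines. This is only an illustration in Python, not the code used in the project, which ran in Unity. The distance thresholds, triangle counts, and the names `LodLevel`, `DOOR_LODS`, and `select_lod` are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LodLevel:
    max_distance_m: float  # this level is used up to this distance from the viewer
    triangle_count: int    # hypothetical triangle budget for the model at this level


# Hypothetical levels for one model, ordered from most to least detailed.
DOOR_LODS = [
    LodLevel(max_distance_m=2.0, triangle_count=20_000),
    LodLevel(max_distance_m=10.0, triangle_count=5_000),
    LodLevel(max_distance_m=float("inf"), triangle_count=500),
]


def select_lod(levels, distance_m):
    """Return the first level whose range covers the viewer distance."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    for level in levels:
        if distance_m <= level.max_distance_m:
            return level
    # Only reached if the last level has a finite range: fall back to the coarsest.
    return levels[-1]
```

A model standing right next to the user would get the 20,000-triangle version here, while the same model seen from across the cabin would fall back to a much cheaper one.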

The models did not come with any textures, so I had to create them. I used the images I gathered from our hangar day to recreate all the stickers and labels. I also baked all the lighting into the textures, so the Oculus Quest and iPad don't have to calculate lighting at runtime. For those who need a short explanation of textures: a texture is an image wrapped around a 3D model to give it more detail or color. There are all sorts of textures, each with a different purpose.

Users need to know whether they are doing something correctly. This is communicated with a simple visual sign and a sound. I created two visuals in Adobe After Effects and some sounds in Ableton Live. These are played whenever the user does something right or wrong.

We had an official test day with trainees and trainers from the KLM training department and tested our VR training. A lot of test feedback is gathered incorrectly, because interviewers may build their own biases into the question. A simple example of this is the difference between "Did you think the training was good?" and "What did you think of the training?" Because of this, we worked out a testing method where we just gave the participant a VR headset and started the app for them. I stressed that not helping the participant was a key point of the test. The rest of my team was there to set up the training for each user, while I asked the users questions about their experience after they were done. In the end, we gathered a lot of meaningful feedback that we could use in further developing the slide raft training.

The iPad version is identical to the VR version, except that looking around is done by swiping a finger over the screen. I spent most of my time translating the procedure into automated events, so iPad users can still experience all the steps correctly. The camera automatically moves through the digital space when needed or when showing a procedure. For example, in VR, the user has to check the outside by physically moving their head to the window, while on an iPad, the user only has to touch and hold the window. The camera then moves itself to the window and back. The iPad version has a user interface with the progress shown in the bottom left corner and a menu button for restarting the training and adjusting the camera speed when looking around. When a user touches an object, they have to keep holding it to perform the check. A circle appears and grows, indicating that the user is indeed pressing on an interactable object. This is done to combat spam clicking.
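The touch-and-hold interaction with its growing circle comes down to a small timer that resets as soon as the finger is lifted. The following is a minimal sketch in Python, not the project's Unity code. The class name `HoldToConfirm`, the one-second hold time, and the per-frame `update` call are assumptions for illustration.

```python
class HoldToConfirm:
    """Tracks how long an interactable object has been pressed without interruption."""

    def __init__(self, required_seconds=1.0):
        if required_seconds <= 0:
            raise ValueError("required_seconds must be positive")
        self.required_seconds = required_seconds
        self.held_seconds = 0.0

    def update(self, is_pressed, delta_seconds):
        """Call once per frame; returns True while the hold is complete."""
        if not is_pressed:
            # Releasing resets the timer, so quick taps never complete a check.
            self.held_seconds = 0.0
            return False
        self.held_seconds = min(
            self.held_seconds + delta_seconds, self.required_seconds
        )
        return self.held_seconds >= self.required_seconds

    @property
    def progress(self):
        """Value from 0.0 to 1.0 that could drive the size of the growing circle."""
        return self.held_seconds / self.required_seconds
```

Because a release sets the timer back to zero, rapid tapping never accumulates progress, which is what makes this pattern resistant to spam clicking.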

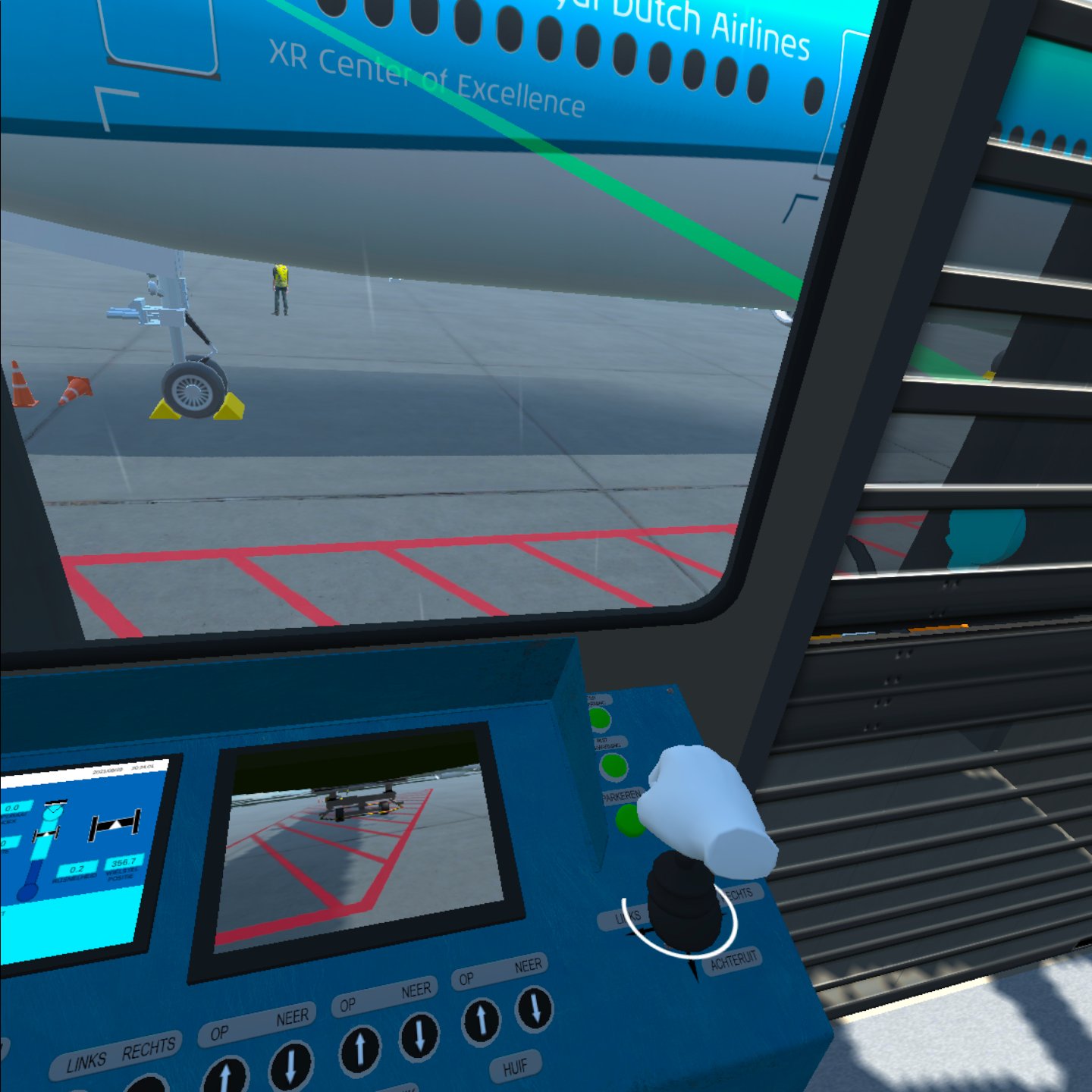

The JetwayBridge training (internally known as the passenger bridge) is meant to pre-train student bridge operators. Students only practice operating the jetway bridge with a real bridge and a real aircraft with regular passengers. This adds a lot of stress and pressure while practicing for the first time. There's also a cost factor. When a student needs more time docking a bridge, passengers could miss their connecting flights or transportation. In an even worse scenario, students could damage an aircraft, costing KLM millions of euros. This all led to developing VR training for this use case. The training already existed for the HTC Vive but was never successfully ported to the Oculus Quest. It was up to me to get the training to work on the Oculus Quest 2.

The training has two modes. One is docking the bridge to an aircraft, and the other is parking the bridge. While parking, the operator has to perform a couple of checks before starting. One of them is looking out of the windows and checking for any nearby workers. If one of the workers walks near the bridge during operation, the operator has to warn them using an alarm, a klaxon in this case. The operator then needs to operate the bridge using the control panel and connect it to the aircraft. After the operation, the student operator can view their stats within the VR training. These show their distance to the optimal docking spot, the mistakes made, and the docking time.

Without going into too much detail on operating the bridge, the controls had to feel realistic with the limited feedback a VR controller can give to a user. I made sure the control stick moved like a real one. In the real jetway bridge, the control stick cannot move diagonally. Operators can only push forward and back or left and right. This had to be recreated in the VR training. I also made sure buttons were visibly pressed in when the player interacts with them. Some code also had to be rewritten for performance optimization. Finally, I had to create transitions between the scenes and, of course, a restart button.
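One common way to prevent diagonal movement is to keep only the dominant axis of the stick input and zero out the other. The sketch below shows that idea in Python. It is not the project's Unity code, and the function name `lock_to_dominant_axis`, the dead zone value, and the tie-breaking rule are assumptions for illustration.

```python
def lock_to_dominant_axis(x, y, dead_zone=0.1):
    """Map a 2D stick input in [-1, 1] to movement along a single axis.

    The larger of the two components wins; the other one is discarded,
    so the virtual stick can never move diagonally.
    """
    if max(abs(x), abs(y)) < dead_zone:
        # Ignore tiny inputs such as hand tremor around the centre position.
        return (0.0, 0.0)
    if abs(x) >= abs(y):
        # Ties go to the horizontal axis (an arbitrary choice in this sketch).
        return (x, 0.0)
    return (0.0, y)
```

With this mapping, pushing the stick mostly to the right and slightly forward results in movement to the right only.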

To get fast results, I first made a wrapper around all the C# Steam libraries for Unity's XR system. That gave me a quick turnaround time to see how the training performed on the Oculus Quest 2. After that, I slowly transitioned to a more native Unity XR system and reprogrammed some of the functionality from the previous team. Finally, I focused on the interaction between player and system. I added visual feedback, haptics, and a start menu, creating a usable and sellable jetway bridge training.

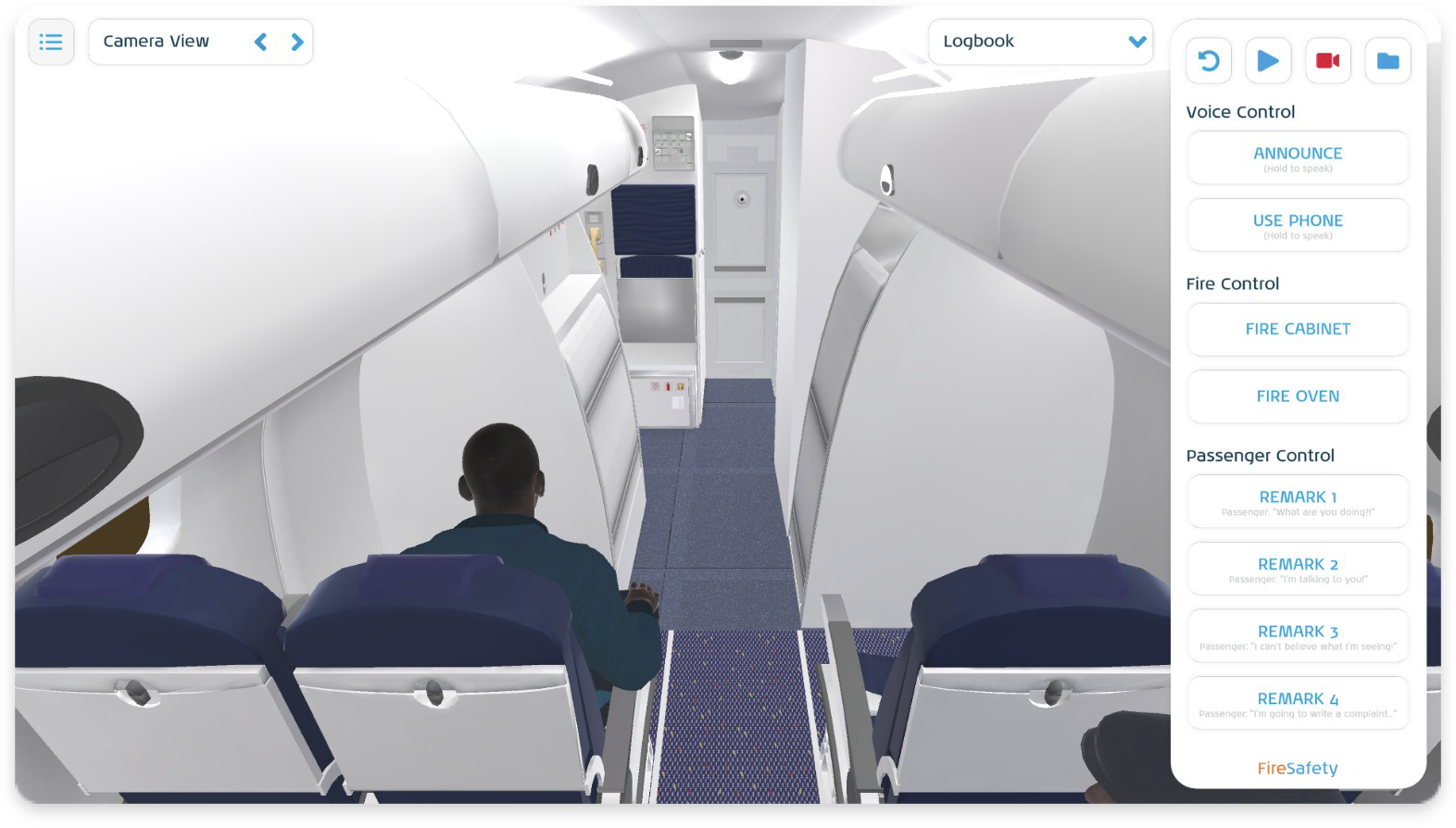

The FireSafety training is a multiplayer training designed to train the cabin crew on what to do when there is a fire inside the cabin of an Embraer 190. There are two possible procedures: fire in the oven or fire in one of the overhead lockers (storage at the top of the cabin). The cabin crew must find the fire's location and follow the correct procedure. The training was already being developed by a previous team and was taken over by our new product team.

During the development of the FireSafety training, I was responsible for the user interaction with the doors and lockers. Users needed to be able to open and close them as they would in real life. Users also needed to be able to lock these doors and lockers. Buttons had to be functional, like the button to silence the fire alarm. I was also responsible for the fire extinguisher. It had to function like a real one. For example, the fire extinguisher does not function upside down. To extinguish a fire successfully, the extinguisher must not be held stationary: it has to be moved quickly from left to right while staying aimed at the core of the fire. Finally, I was responsible for lighting and UI. Two UI screens had to be created: one for the Trainer (teacher) and one for the Trainees (students). The Trainer needed a set of buttons to make a multiplayer room, start a fire, let NPCs talk, talk to the students, and reset the training. The Trainees had to be able to select and join the correct room in a log-in hub.
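The extinguisher rules (upright, aimed at the core, and swept from side to side) can be read as three conditions that must all hold before the fire loses strength. Here is a minimal sketch of that logic in Python. It is not the project's Unity code, and every name and threshold (`SprayFrame`, `is_effective`, `apply_spray`, 15 degrees, 30 degrees per second, the extinguishing rate) is an assumption chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class SprayFrame:
    """Hypothetical per-frame measurements while the trigger is held."""

    nozzle_up_dot: float      # 1.0 = extinguisher upright, -1.0 = upside down
    aim_error_deg: float      # angle between the nozzle direction and the fire core
    sweep_speed_deg_s: float  # horizontal angular speed of the nozzle


def is_effective(frame, max_aim_error_deg=15.0, min_sweep_speed_deg_s=30.0):
    """All three conditions must hold for the spray to have any effect."""
    upright = frame.nozzle_up_dot > 0.0
    on_target = frame.aim_error_deg <= max_aim_error_deg
    sweeping = frame.sweep_speed_deg_s >= min_sweep_speed_deg_s
    return upright and on_target and sweeping


def apply_spray(fire_health, frame, delta_seconds, rate_per_second=0.5):
    """Return the new fire strength, never dropping below zero."""
    if is_effective(frame):
        fire_health -= rate_per_second * delta_seconds
    return max(fire_health, 0.0)
```

Keeping the check separate from the damage calculation would also make it easy to show the trainee which of the three conditions they are failing.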

After three months of hard work and multiple demos, we reached version 1.0, and after close communication with our external and internal stakeholders, there is now a usable version of the FireSafety training.

Working with a product team within KLM was a different kind of experience. It taught me a lot about how to change the way people perceive an innovative project in a world where the company wants to innovate but at the same time recognizes the risks. It also allowed me to get my hands on all kinds of hardware, such as the HoloLens and Magic Leap, and experiment with them. But most importantly, it gave me a different perspective on working with all kinds of people, even outside of my team.